Einstein Trust Layer: The Backbone of Secure AI in Salesforce

Author: Rishabh Sharma

Introduction: Can You Trust AI With Your Most Sensitive Data?

Imagine you’re building the next generation of customer experiences with Salesforce’s AI-powered Agentforce. The possibilities are endless—automated case resolutions, personalized recommendations, and instant insights. But there’s a catch: your data is your crown jewel. How do you harness the immense power of AI without ever compromising on data security, privacy, or compliance?

Enter the Einstein Trust Layer—Salesforce’s answer to the toughest questions in enterprise AI. In this post, we’ll go deep into the technical architecture, real-world implications, and actionable strategies for developers. You’ll learn not just what the Trust Layer is, but how to leverage it to build secure, responsible, and future-proof AI solutions.

Table of Contents

- What is the Einstein Trust Layer?

- Why Trust Matters in Enterprise AI

- Core Features of the Einstein Trust Layer

- How the Trust Layer Works: A Step-by-Step Journey

- Real-World Scenarios: Trust Layer in Action

- Actionable Takeaways for Salesforce Developers

- Conclusion: Building AI You—and Your Customers—Can Trust

What is the Einstein Trust Layer?

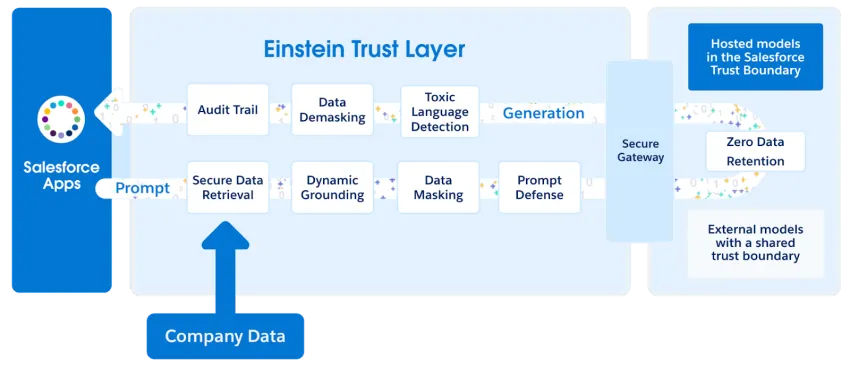

The Einstein Trust Layer is a security and governance framework built into Salesforce’s AI stack. It acts as a protective shield between your org’s data and the large language models (LLMs) powering Agentforce and other Einstein features. Its mission: deliver cutting-edge AI while ensuring your data never leaves your control.

Why Trust Matters in Enterprise AI

AI is only as good as the trust you place in it. In regulated industries—finance, healthcare, government—data privacy isn’t just a feature, it’s a mandate. The Einstein Trust Layer is Salesforce’s answer to questions like:

- Who can see my data?

- Where does my data go?

- Can the AI “learn” from my customer records?

- How do I prove compliance to auditors?

Unique Perspective:

Many AI integrations bolt security on after the fact. Salesforce builds it into the foundation, so every AI request passes through the same trust checks before it reaches a model.

Core Features of the Einstein Trust Layer

Dynamic Grounding

Dynamic Grounding retrieves only the information needed for a prompt, and only if the user has access to it. The AI's responses are grounded in relevant context while still respecting your org's sharing rules and field-level security.

Example:

A support agent asks Agentforce for a customer’s order history. Dynamic Grounding ensures only orders the agent is allowed to see are included in the AI’s response.
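To make the idea concrete, here is a minimal sketch in Python. It is not the Trust Layer's API: the `Order` class, `visible_orders`, and `build_grounded_prompt` are hypothetical names, and record ownership stands in for the much richer sharing model a real org uses.

```python
from dataclasses import dataclass


# Hypothetical in-memory model. A real org enforces access through sharing
# rules and field-level security, not through application code like this.
@dataclass(frozen=True)
class Order:
    order_id: str
    owner_id: str
    total: float
    internal_notes: str


def visible_orders(orders, user_id, readable_fields):
    """Return only the orders the user owns, trimmed to fields they may read."""
    grounded = []
    for order in orders:
        if order.owner_id != user_id:
            continue  # record-level check: skip records the user cannot see
        # field-level check: copy only the permitted fields
        grounded.append({name: getattr(order, name) for name in readable_fields})
    return grounded


def build_grounded_prompt(question, rows):
    """Attach the permitted records to the question as plain-text context."""
    context = "\n".join(str(row) for row in rows)
    return f"Context:\n{context}\n\nQuestion: {question}"


orders = [
    Order("O-1", "agent-7", 120.0, "VIP customer"),
    Order("O-2", "agent-9", 75.5, "chargeback pending"),
]
rows = visible_orders(orders, "agent-7", ["order_id", "total"])
print(build_grounded_prompt("Summarize this customer's order history.", rows))
```

The point of the sketch is the ordering: access checks run before anything is added to the prompt, so the model never sees a record or field the requesting user could not open themselves.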

Semantic Search

Semantic Search uses advanced machine learning to find relevant information—like Knowledge Articles or FAQs—based on the meaning of the prompt, not just keywords.

Example:

A user types “How do I reset my password?” and the Trust Layer surfaces the most relevant help articles, even if the wording is different.
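A rough way to picture "meaning, not keywords" is vector similarity. The sketch below ranks articles by cosine similarity. The three-dimensional vectors are hand-written stand-ins for real embeddings, and the article titles and `rank_articles` function are made up for illustration. How Salesforce actually builds and stores its embeddings is not covered in this post.

```python
import math


def cosine_similarity(a, b):
    """Cosine of the angle between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


# Toy vectors standing in for embeddings produced by a real model.
ARTICLES = {
    "Recovering access to your account": [0.9, 0.1, 0.0],
    "Updating your billing address": [0.1, 0.9, 0.1],
    "Tracking a shipment": [0.0, 0.2, 0.9],
}


def rank_articles(query_vector, articles, top_k=1):
    """Return the titles of the top_k articles closest to the query vector."""
    scored = sorted(
        articles.items(),
        key=lambda item: cosine_similarity(query_vector, item[1]),
        reverse=True,
    )
    return [title for title, _ in scored[:top_k]]


# Pretend embedding of "How do I reset my password?"
query_vector = [0.8, 0.2, 0.1]
print(rank_articles(query_vector, ARTICLES))
```

The query shares no words with "Recovering access to your account", yet that article ranks first because its vector points in nearly the same direction.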

Data Masking

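Before any prompt is sent to the LLM, the Trust Layer scans for sensitive data (names, addresses, account numbers) and replaces what it detects with placeholders. The goal is to keep customer PII inside your org. Automated detection can miss unusual formats, so validate masking against your own data rather than assuming complete coverage.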

Example:

A prompt containing “Contact John Doe at 555-1234” is transformed to “Contact [NAME] at [PHONE]” before reaching the LLM.
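Here is a simplified sketch of that transformation. The regular expressions, the `known_names` parameter, and the `mask` function are assumptions for illustration only. Real detection covers many more data types than two patterns. Placeholders are numbered (`[NAME_1]` rather than `[NAME]`) so each one maps back to exactly one original value.

```python
import re

# Illustrative patterns only; a production detector handles far more formats.
PATTERNS = {
    "PHONE": re.compile(r"\b\d{3}-\d{4}\b"),
    "EMAIL": re.compile(r"\b[\w.+-]+@[\w-]+\.[\w.-]+\b"),
}


def mask(text, known_names=()):
    """Replace sensitive values with placeholders; return text and mapping."""
    mapping = {}   # placeholder -> original value
    counters = {}  # label -> how many placeholders issued so far

    def placeholder(label, value):
        # Reuse the same token if this exact value was already masked.
        for token, original in mapping.items():
            if original == value:
                return token
        counters[label] = counters.get(label, 0) + 1
        token = f"[{label}_{counters[label]}]"
        mapping[token] = value
        return token

    # Names come from a supplied list here (for example, record fields).
    for name in known_names:
        if name in text:
            text = text.replace(name, placeholder("NAME", name))
    for label, pattern in PATTERNS.items():
        text = pattern.sub(
            lambda m, label=label: placeholder(label, m.group(0)), text
        )
    return text, mapping


masked, mapping = mask("Contact John Doe at 555-1234", known_names=["John Doe"])
print(masked)   # Contact [NAME_1] at [PHONE_1]
print(mapping)
```

The mapping stays on the calling side and is never sent to the model; it is what makes demasking possible later.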

Prompt Defense

Prompt Defense adds guardrails to every prompt, instructing the LLM to avoid hallucinations, stay on topic, and never return sensitive or inappropriate content.

Example:

The Trust Layer appends instructions like “Do not fabricate information. Only answer based on provided context.”
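Conceptually, this amounts to wrapping the user's text in a fixed template. The sketch below shows one possible shape. The template layout and the `apply_prompt_defense` function are hypothetical, and the third guardrail line is an added assumption rather than wording taken from Salesforce.

```python
# The first two guardrails come from the example above; the third is an
# illustrative addition, not documented Trust Layer wording.
GUARDRAILS = (
    "Do not fabricate information.",
    "Only answer based on provided context.",
    "If the context does not contain the answer, say you do not know.",
)


def apply_prompt_defense(user_prompt, context):
    """Wrap the user's prompt with guardrail instructions and context."""
    rules = "\n".join(f"- {rule}" for rule in GUARDRAILS)
    return (
        f"Instructions:\n{rules}\n\n"
        f"Context:\n{context}\n\n"
        f"User question:\n{user_prompt}"
    )


prompt = apply_prompt_defense(
    "What is the refund policy?",
    "Refunds are accepted within 30 days.",
)
print(prompt)
```

Keeping the instructions, the context, and the user's text in clearly separated sections makes it harder for user input to masquerade as a system instruction.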

Zero Data Retention

Salesforce's contracts with LLM providers prohibit them from storing your data or using it for training. Under these agreements, your prompts and responses are not used to improve the provider's models and are not retained by the provider once the request has been processed.

Example:

A healthcare org can use Agentforce AI without worrying that patient data will be used to train future models.

Toxic Language Detection

Every prompt and response is scanned for toxic, biased, or inappropriate language. If detected, the Trust Layer can block the response or flag it for review.

Example:

A user prompt or LLM response containing hate speech or personal attacks is filtered out before reaching the end user.
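The general pattern is score, compare to a threshold, then allow or block. The sketch below uses a tiny keyword table purely to stay self-contained. The word list, scores, threshold, and function names are all invented for illustration, and a real detector would rely on a trained classifier rather than keywords.

```python
import re

# Invented scores for illustration; real systems use a trained classifier.
WORD_SCORES = {"idiot": 0.6, "stupid": 0.5, "hate": 0.7}
THRESHOLD = 0.5


def toxicity_score(text):
    """Return the highest per-word score found in the text (0.0 if none)."""
    words = re.findall(r"[a-z']+", text.lower())
    return max((WORD_SCORES.get(word, 0.0) for word in words), default=0.0)


def screen(text, threshold=THRESHOLD):
    """Decide whether text may pass, keeping the score for later review."""
    score = toxicity_score(text)
    return {"allowed": score < threshold, "score": score}


print(screen("Thanks for your help"))
print(screen("You are an idiot"))
```

Returning the score alongside the decision matters: it lets the same value be logged and reviewed later instead of being discarded after the check.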

Data Demasking

After the LLM generates a response, the Trust Layer reverses the masking—replacing placeholders with the original customer data, but only for users with the right permissions.

Example:

The response “Contact [NAME] at [PHONE]” is demasked to “Contact John Doe at 555-1234” for authorized users.
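A minimal sketch of the reverse step, assuming the masking stage kept a placeholder-to-value mapping. The `demask` function and the `user_can_view_pii` flag are hypothetical names standing in for whatever permission check applies in your org.

```python
def demask(response, mapping, user_can_view_pii):
    """Swap placeholders back to original values for permitted users only."""
    if not user_can_view_pii:
        return response  # leave placeholders in place
    for token, original in mapping.items():
        response = response.replace(token, original)
    return response


# Mapping produced earlier by the masking step (kept out of the LLM call).
mapping = {"[NAME]": "John Doe", "[PHONE]": "555-1234"}
llm_response = "Contact [NAME] at [PHONE]"

print(demask(llm_response, mapping, user_can_view_pii=True))
# Contact John Doe at 555-1234
print(demask(llm_response, mapping, user_can_view_pii=False))
# Contact [NAME] at [PHONE]
```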

Audit Trail

Every step—prompt, response, masking, toxic language scores, and feedback—is logged in an immutable audit trail. This provides full transparency and accountability for every AI interaction.

Example:

During an audit, you can trace exactly what data was sent, how it was processed, and what the LLM returned.
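This post does not describe how the audit data is stored, so the following is only a generic illustration of what "tamper-evident" logging can look like. It chains entries together with SHA-256 hashes so that editing any earlier entry breaks verification. The `AuditTrail` class and its methods are hypothetical.

```python
import hashlib
import json

GENESIS_HASH = "0" * 64  # placeholder "previous hash" for the first entry


def _digest(body):
    """Stable SHA-256 hash of an entry body."""
    encoded = json.dumps(body, sort_keys=True).encode("utf-8")
    return hashlib.sha256(encoded).hexdigest()


class AuditTrail:
    """Append-only log where each entry commits to the one before it."""

    def __init__(self):
        self._entries = []

    def record(self, step, detail):
        previous = self._entries[-1]["hash"] if self._entries else GENESIS_HASH
        body = {"step": step, "detail": detail, "previous_hash": previous}
        self._entries.append({**body, "hash": _digest(body)})

    def verify(self):
        """Recompute every hash; return False if any entry was altered."""
        previous = GENESIS_HASH
        for entry in self._entries:
            body = {
                "step": entry["step"],
                "detail": entry["detail"],
                "previous_hash": entry["previous_hash"],
            }
            if entry["previous_hash"] != previous or entry["hash"] != _digest(body):
                return False
            previous = entry["hash"]
        return True


trail = AuditTrail()
trail.record("masking", "Contact [NAME] at [PHONE]")
trail.record("toxicity", {"score": 0.0, "allowed": True})
print(trail.verify())
```

Whatever the storage layer, the property worth testing for is the same: an auditor should be able to tell whether the record of an interaction was changed after the fact.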

How the Trust Layer Works: A Step-by-Step Journey

- User Prompt: A user asks Agentforce a question or requests an action.

- Dynamic Grounding: The Trust Layer fetches only the data the user is allowed to access.

- Data Masking: Sensitive information is identified and masked.

- Prompt Defense: Guardrails are added to the prompt.

- LLM Processing: The masked, grounded prompt is sent to the LLM.

- Toxic Language Detection: The response is scanned for inappropriate content.

- Data Demasking: Masked data is restored for authorized users.

- Audit Trail: Every step is logged for compliance and review.
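The steps above can be sketched end to end in a few dozen lines. Everything here is a stand-in: `fake_llm` simply echoes its context instead of calling a model, the phone-number regex and blocked-word list are toy examples, and none of the function names correspond to a Salesforce API. The sketch is meant only to show the order of operations.

```python
import re

PHONE = re.compile(r"\b\d{3}-\d{4}\b")
GUARDRAIL = "Only answer based on provided context."
BLOCKED_WORDS = {"idiot", "stupid"}  # toy list for illustration


def ground(user_id, records):
    """Keep only the records this user is allowed to read."""
    return [r["text"] for r in records if user_id in r["allowed_users"]]


def mask(text):
    """Replace phone numbers with numbered placeholders."""
    mapping = {}

    def swap(match):
        token = f"[PHONE_{len(mapping) + 1}]"
        mapping[token] = match.group(0)
        return token

    return PHONE.sub(swap, text), mapping


def fake_llm(prompt):
    """Stand-in for a model call: repeats the context it was given."""
    context = prompt.split("Context:\n", 1)[1].split("\n\nQuestion:", 1)[0]
    return f"Based on the context: {context}"


def is_toxic(text):
    return any(w in BLOCKED_WORDS for w in re.findall(r"[a-z]+", text.lower()))


def demask(text, mapping):
    for token, original in mapping.items():
        text = text.replace(token, original)
    return text


def handle_prompt(user_id, question, records):
    audit = []
    rows = ground(user_id, records)
    audit.append(("grounding", f"{len(rows)} record(s)"))
    masked_context, mapping = mask("\n".join(rows))
    audit.append(("masking", masked_context))
    prompt = f"{GUARDRAIL}\n\nContext:\n{masked_context}\n\nQuestion: {question}"
    response = fake_llm(prompt)
    audit.append(("llm_response", response))
    if is_toxic(response):
        audit.append(("toxicity", "blocked"))
        return "Response withheld.", audit
    audit.append(("toxicity", "passed"))
    return demask(response, mapping), audit


records = [
    {"text": "Call the customer at 555-1234", "allowed_users": {"agent-7"}},
    {"text": "Escalation line 555-9999", "allowed_users": {"agent-9"}},
]
answer, audit = handle_prompt("agent-7", "How do I reach the customer?", records)
print(answer)
for step, detail in audit:
    print(step, "->", detail)
```

Note that the audit entries for the masking and LLM steps contain only placeholders; the real phone number reappears only in the final answer returned to the user.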

Real-World Scenarios: Trust Layer in Action

Scenario 1: Financial Services

A bank uses Agentforce to answer customer questions about account balances. The Trust Layer ensures only the customer’s own data is accessed, masks account numbers, and blocks any toxic or inappropriate responses.

Scenario 2: Healthcare

A hospital uses Agentforce to summarize patient records. The Trust Layer masks detected PII, ensures only authorized staff can view sensitive data, and logs every interaction to support HIPAA compliance reviews.

Scenario 3: Customer Support

A global retailer uses Agentforce to automate support. The Trust Layer grounds responses in the latest knowledge base, masks customer data, and provides a full audit trail for every chat.

Actionable Takeaways for Salesforce Developers

- Design for Least Privilege: Always use sharing rules and field-level security. The Trust Layer enforces them, but your data model should too.

- Monitor the Audit Trail: Use audit logs to review AI interactions, spot anomalies, and improve prompt engineering.

- Test Masking and Demasking: Validate that sensitive data is always masked before LLM processing and correctly restored after.

- Leverage Prompt Defense: Write clear, context-rich prompts and use the Trust Layer’s guardrails to reduce hallucinations.

- Stay Informed: The Trust Layer evolves—keep up with Salesforce release notes and best practices.

Conclusion: Building AI You—and Your Customers—Can Trust

The Einstein Trust Layer is more than a security feature—it’s the foundation for responsible, enterprise-grade AI in Salesforce. By understanding and leveraging its capabilities, you can build solutions that are not only powerful, but also secure, compliant, and trustworthy.

Ready to build the future of AI on Salesforce?

- Audit your org’s data access and sharing rules

- Experiment with Agentforce and the Trust Layer features

- Share your learnings with the Salesforce developer community

Trust isn’t just a buzzword—it’s the backbone of every great AI solution. With the Einstein Trust Layer, you can innovate with confidence.

Learn More: Trailhead and Salesforce Documentation

Explore Trailhead and the Salesforce documentation to deepen your understanding, stay up to date, and get hands-on with the Einstein Trust Layer in your own org.